CUDA is now relevant to consumers

Nvidia has been talking about CUDA for quite some time now (in fact, ever since the launch of the GeForce 8800 GTX), but until now it hasn't been of much interest to general consumers. That's because almost all of the applications accelerated by CUDA have focused on crunching massive amounts of data – exactly what a GPU is great at doing. These include oil and gas exploration, fluid dynamics, weather forecasting, financial forecasting and biomedical imaging, amongst others. Just looking at that list tells you that these aren't really relevant computing tasks in the consumer space, and they certainly don't have broad appeal – although the data coming from those applications might be of interest to many of us.

I've spent a lot of time talking to various Nvidia luminaries about accelerating consumer-class applications with CUDA, and what became clear was that very few consumer tasks are so massively parallel that the GPU can accelerate them effectively. However, that doesn't mean CUDA is completely useless for us consumers, because there are applications that would benefit from a massively parallel processor like GT200.

On numerous occasions in the past few months, the company has been keen to highlight the more consumer-orientated applications coming to market later this year and to stress how relevant they will be to consumers. Nvidia claims that over 70 million CUDA-capable GPUs have shipped since G80's launch, which makes for a considerable and relevant installed base.

In fact, it's that installed base that has caught the attention of several big organisations like Adobe and Stanford University. We have already talked about Adobe's plans to accelerate certain things inside Photoshop 'Next' with the GPU, but that wasn't the only application claiming to deliver massive performance increases.

Nvidia also invited a firm called Elemental Technologies along to show off its video transcoding application, BadaBoom, which delivered some seriously impressive performance gains. We're told that the application won't be available until August, but we have been testing an alpha version of the software, and not only is the performance there, but so is the image quality.

We've seen speeds of well over 100 frames per second while converting DVD VOB files into H.264-encoded files designed to be played on an iPhone – that's several times faster than real time and, in theory, many times faster than a CPU transcode. What isn't clear, though, is which quality settings BadaBoom actually uses.
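
To put "several times faster than real time" in context, the short sketch below computes a real-time factor as encode speed divided by the source frame rate. It assumes a DVD source running at 25fps (PAL) or 29.97fps (NTSC) and uses 100fps as a conservative stand-in for the "well over 100 fps" we observed; the function name `real_time_factor` is purely illustrative and has nothing to do with BadaBoom itself.

```python
# Real-time factor: seconds of video transcoded per second of wall-clock time.
# Assumption: DVD sources run at 25 fps (PAL) or 29.97 fps (NTSC), and
# 100 fps is a conservative stand-in for the "well over 100 fps" observed.

def real_time_factor(encode_fps: float, source_fps: float) -> float:
    """Return how many times faster than real-time playback the encode runs."""
    return encode_fps / source_fps


if __name__ == "__main__":
    for label, source_fps in [("PAL", 25.0), ("NTSC", 29.97)]:
        factor = real_time_factor(100.0, source_fps)
        print(f"{label}: {factor:.1f}x real time")
```

At 100fps that works out to 4.0x real time for PAL material and roughly 3.3x for NTSC; faster encodes scale the factor linearly.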

With that in mind, I'm not convinced that the image quality is the highest I've ever seen, but the transcodes I've done using the application were light years away from the poor quality that Intel would have you believe. Since the application is designed to convert video to a format that works on your handheld device of choice (iPhone/iPod, Zune, etc.), having the absolute highest image quality isn't much of a concern for me personally, and having studied the transcodes, the quality was at least respectable. We'll no doubt do more testing with the application over time, and we'll make side-by-side comparisons against transcodes completed on the CPU using other applications at a range of quality settings.

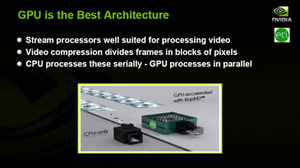

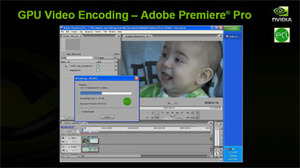

Sam Blackman, CEO of Elemental Technologies, then showed off a plug-in for Adobe Premiere Pro that uses the same RapiHD encoding technology as BadaBoom. The demo showed a 1,920 x 1,080 AVCHD recording being encoded faster than real time using the AVC/H.264 Main Profile – again, a massive speed-up compared to CPU-only encoding, but we'd also like to see support for higher-quality profiles such as High Profile. We were told that work on these profiles was underway, but no timeframe was given; they probably won't be available when the plug-in is released later this year, but will be added at a later date.

Nvidia also invited Vijay Pande, the scientist behind Stanford University's Folding@Home distributed computing project, who came to show off a client for Nvidia's CUDA-capable GPUs. I know many of you have been waiting for this, especially since a client for ATI GPUs was released just before the curtains were pulled back on Nvidia's GeForce 8800 series, and I have to say it's great news that it's here... even if we have been waiting an age to see it!

It runs at a decent rate of knots: our pre-beta client manages between 500 and 600 nanoseconds per day (nanoseconds of simulated protein-folding time computed per day of real time) on a GeForce GTX 280, which is definitely impressive. However, we're not entirely happy with the suggestions we've been given for comparative benchmarking across a range of hardware, so we're holding back from making any direct performance comparisons with other hardware for the time being.

